I needed to write a thesis for my master's degree, and I wanted to focus my year-long research on accessibility. My goal was to let people who could not use a mouse, such as those with carpal tunnel syndrome or without five fingers on each hand, use a desktop computer with ease.

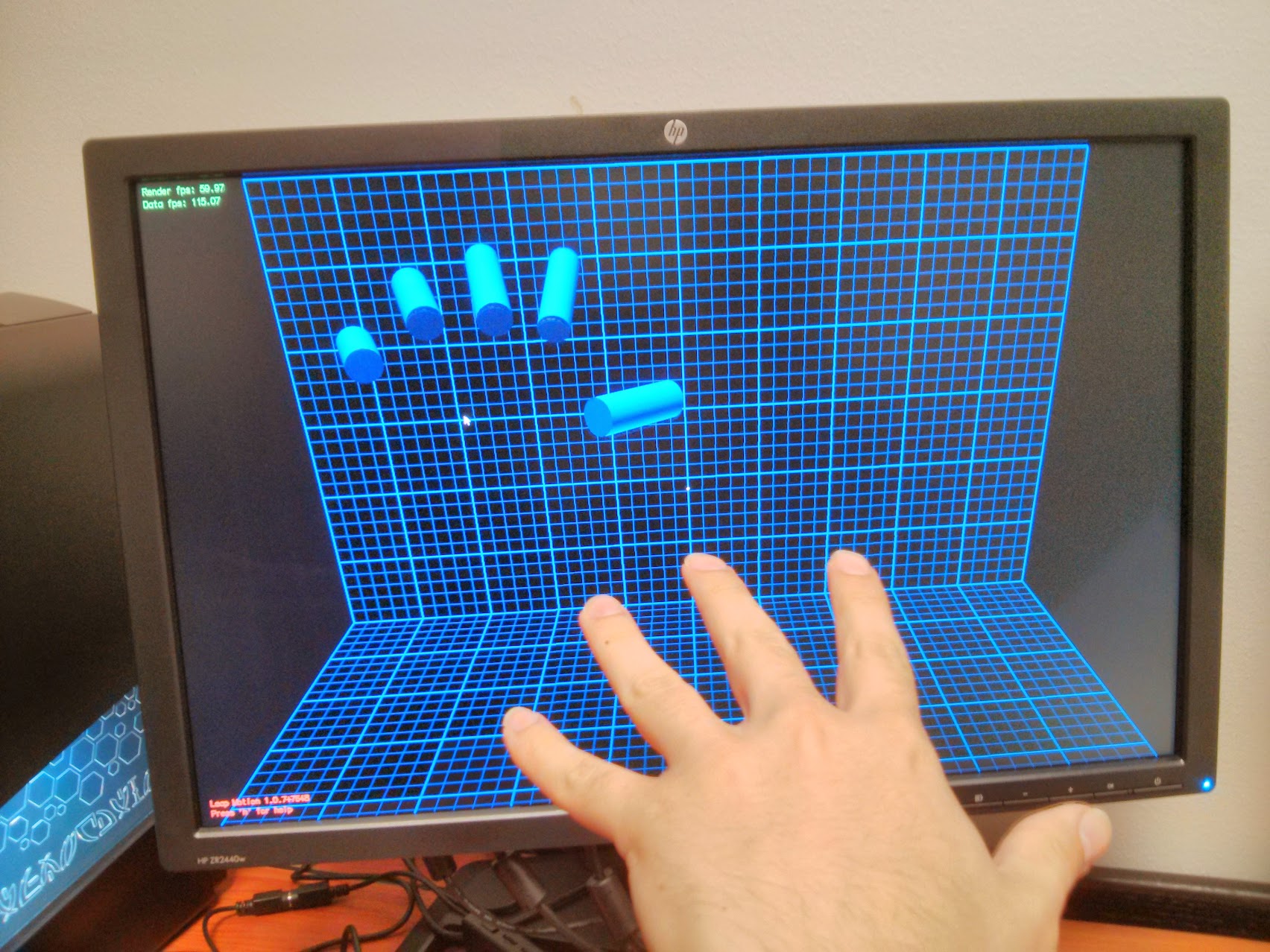

Around the same time, Leap Motion released its flagship product, which enabled hand recognition, and I saw an opportunity. My supervisor purchased a Leap Motion the first chance he got, and I spent the summer of 2013 trying it out.

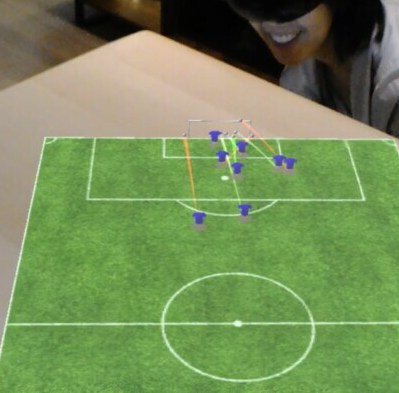

One year’s worth of my research can be summarised as follows: gestural interaction suffers from a major usability issue, fatigue (a.k.a. “Gorilla Arm Syndrome”). This can be resolved by letting people rest their elbow on a surface and by modeling their interaction space with a spherical coordinate system (as opposed to a Cartesian one), where the elbow is the center and the length of the arm is the radius.
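As a rough illustration of the spherical model described above, the sketch below converts a tracked hand position into angles around a resting elbow and then maps those angles to a cursor position. It is not the code from the thesis: the axis convention (x right, y up, z forward), the function names, and the angular ranges are all assumptions chosen for the example.

```python
import math

def hand_to_spherical(hand, elbow):
    """Express the hand position relative to the elbow as (r, azimuth, elevation).

    Assumed axes (hypothetical, not taken from the thesis):
    x points right, y points up, z points forward, away from the user.
    Angles are in radians.
    """
    dx, dy, dz = (h - e for h, e in zip(hand, elbow))
    r = math.sqrt(dx * dx + dy * dy + dz * dz)   # roughly the arm length
    azimuth = math.atan2(dx, dz)                 # left/right swing around the elbow
    elevation = math.atan2(dy, math.hypot(dx, dz))  # up/down tilt of the arm
    return r, azimuth, elevation

def angles_to_cursor(azimuth, elevation, width, height,
                     max_azimuth=math.radians(30),
                     max_elevation=math.radians(20)):
    """Map the two angles to pixel coordinates; the radius is ignored.

    max_azimuth and max_elevation are illustrative comfort ranges (assumed).
    """
    def clamp(v):
        return max(-1.0, min(1.0, v))

    nx = clamp(azimuth / max_azimuth)      # -1 (left edge) .. 1 (right edge)
    ny = clamp(elevation / max_elevation)  # -1 (bottom edge) .. 1 (top edge)
    x = (nx + 1) / 2 * (width - 1)
    y = (1 - (ny + 1) / 2) * (height - 1)  # screen y grows downward
    return x, y

# Example: elbow resting at the origin, hand 30 cm straight ahead.
r, az, el = hand_to_spherical((0.0, 0.0, 0.3), (0.0, 0.0, 0.0))
cursor = angles_to_cursor(az, el, 1920, 1080)
```

Because only the two angles drive the cursor in this sketch, the hand can pivot around the resting elbow without the arm having to hover in mid-air, which is the intuition behind using the elbow as the center.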

I also figured out that the recommended position of the Leap Motion was not optimal for this interaction. It worked better when it was slightly tilted, but how tilted? Using a little bit of plasticine (a.k.a. silly putty), sometimes Lego bricks, and a few fake hands, I found that tilting the device 30 degrees allowed significantly better recognition.

Another problem was testing the system. Testing requires continuous use of one’s hand, which is tiring. So my advisor got me a box of body parts, including a latex hand, which I attached to a tripod.

Accessibility

The main goal of this research was actually to allow people who couldn’t use a mouse due to some disability to interact with the PC using gestures. I tried this myself using gloves intended to simulate arthritis, and eventually tested with people with the same issue. During an accessibility conference we discovered that a person who had only 3 fingers on his hand could use our approach comfortably.

Impact

This work started a line of publications. At least two other students wrote their master's theses using my code and initial findings as a basis, and my advisor received a well-deserved $150,000 National Science Foundation grant to pursue more research on this topic. This was an especially impressive feat, since my advisor had just started at Baylor University.

My work was previously cited by Leap Motion in Version 2 of their API. Additionally, while I don’t claim sole credit for this, newer versions of the company’s products are tilted by default, rather than lying flat on a surface.

I also gained experience working with a vulnerable population, i.e. people with accessibility needs. I have since incorporated accessibility into all my work.

On a more personal level, this work had a huge impact on me: it helped me understand what it means to develop expertise in a very niche area. I have since reviewed many papers on this topic for some prominent journals and conferences and I’ve been able to consult on related projects.

My biggest claim to fame, however, is that 8 years after I graduated from Baylor University, my former advisor and I are still the face of Baylor Computer Science.

Role

For my part, I learnt a lot about what it takes to do research that extends beyond my own participation. This was my first dive into usability and research. I learnt what it takes to start a research group, how to build a lab (including which technical practices to encourage and which to let go), how to encourage juniors to do their own research, how to (and how not to) build a steady stream of study participants, and what it takes to do original, publishable research.

My previous experience as a Team Lead in a development team was definitely useful: it allowed me to mentor researchers both to write good code and to do research that was meaningful to them and to the public good. This was not my first experience in leadership, but it was certainly my first experience in leading researchers.

Publications

Personal space: User defined gesture space for GUI interaction

A Jude, GM Poor, D Guinness

Models for rested touchless gestural interaction

D Guinness, A Jude, GM Poor, A Dover

An evaluation of touchless hand gestural interaction for pointing tasks with preferred and non-preferred hands

A Jude, GM Poor, D Guinness

Grasp, grab or pinch? identifying user preference for in-air gestural manipulation

A Jude, GM Poor, D Guinness

Reporting and Visualizing Fitts’s Law: Dataset, Tools and Methodologies

A Jude, D Guinness, GM Poor

Gestures with speech for hand-impaired persons

D Guinness, GM Poor, A Jude

Improving gestural interaction with augmented cursors

A Dover, GM Poor, D Guinness, A Jude

Evaluating multimodal interaction with gestures and speech for point and select tasks

A Jude, GM Poor, D Guinness

Bimanual Word Gesture Keyboards for Mid-air Gestures

G Benoit, GM Poor, A Jude

Modeling mid-air gestures with spherical coordinates

D Guinness, A Seung, A Dover, GM Poor, A Jude

Interaction can hurt-Exploring gesture-based interaction for users with Chronic Pain

GM Poor, A Jude